Comparison between the Classical and LeafLib models: evaluating training performance, efficiency, and optimization potential.

Published: October 2025

Dataset Volume

30,000 × 41 samples

Training GPU

Apple M1 Pro (16-core GPU, Metal / MPS acceleration)

Model Parameters

Classical Model: ~ 9.4k

LeafNet Model: ~ 1.4k
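For readers unfamiliar with how such parameter counts arise, the sketch below counts the trainable parameters (weights plus biases) of a stack of fully connected layers. The layer widths are hypothetical: the classical model's actual architecture is not given in this report, and the input width of 41 assumes, for illustration only, that the 41 in the dataset volume is the number of input features.

```python
def dense_param_count(layer_sizes):
    """Count weights + biases in a stack of fully connected layers.

    layer_sizes lists the width of each layer, input layer first.
    Each connection n_in -> n_out contributes n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical layout (not the classical model's real one):
# 41 inputs, two hidden layers of 64 units, 2 outputs.
print(dense_param_count([41, 64, 64, 2]))  # 2688 + 4160 + 130 = 6978
```

Under this kind of layout the count grows with the product of adjacent layer widths, which is why even small dense networks quickly reach thousands of parameters.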

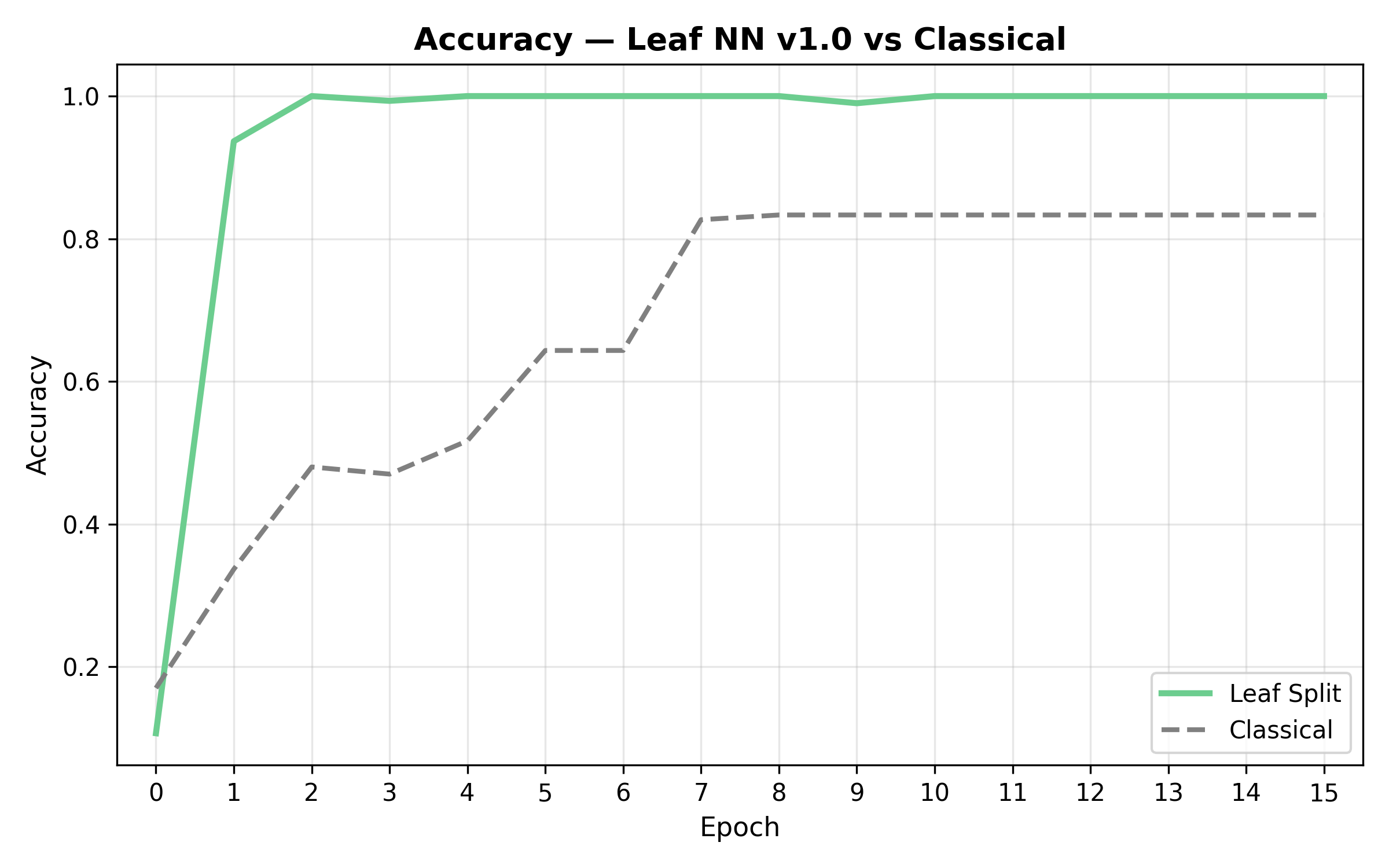

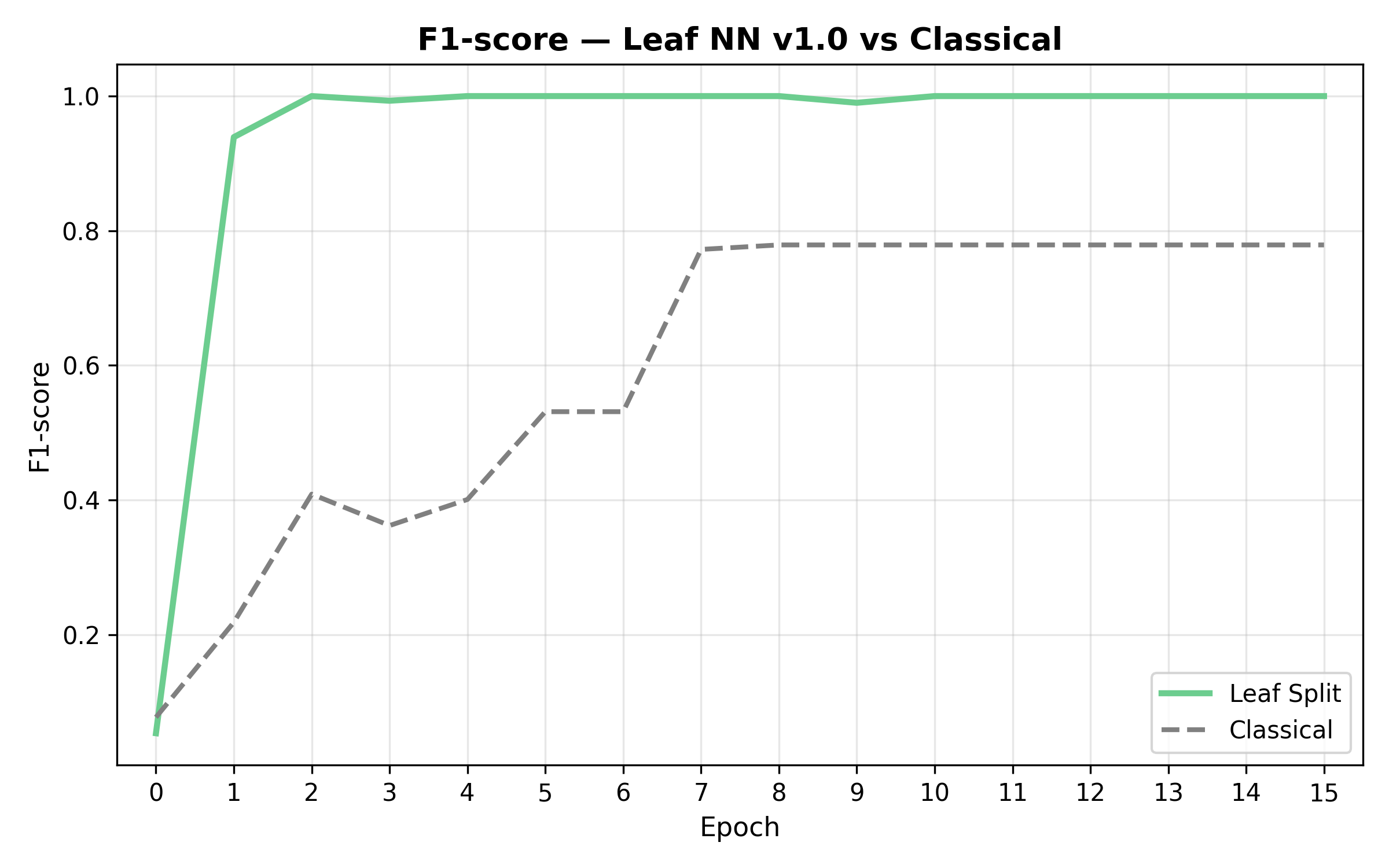

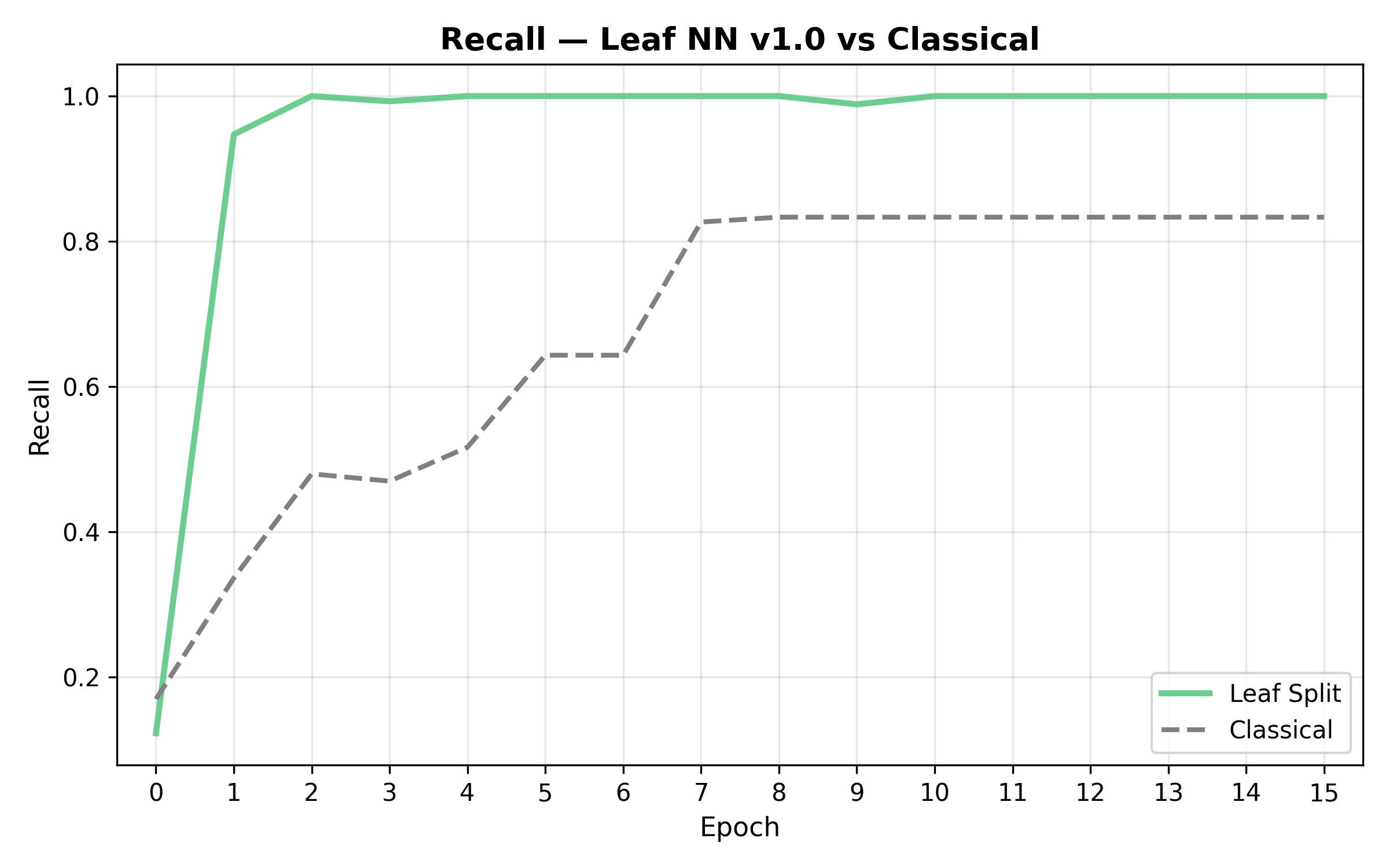

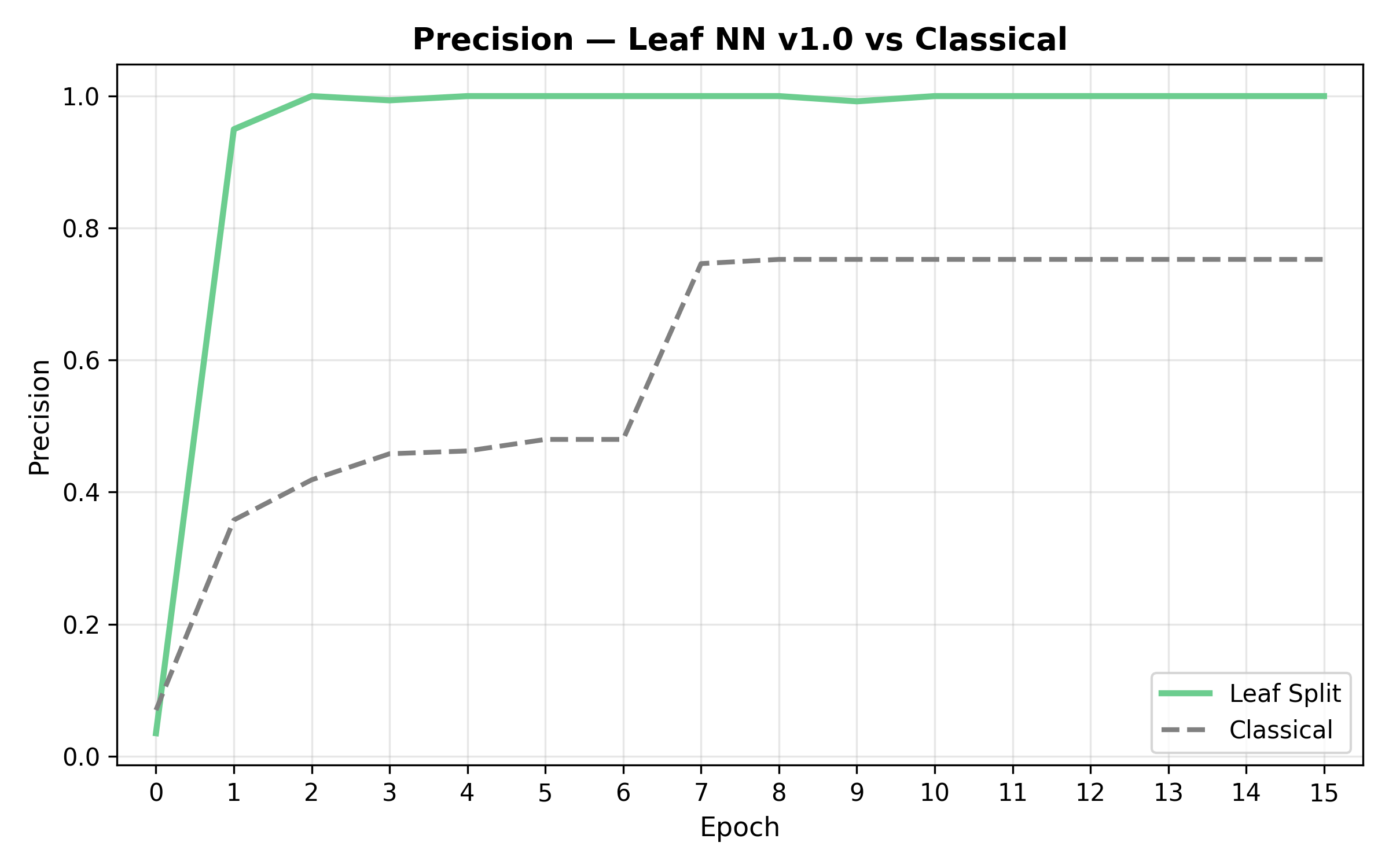

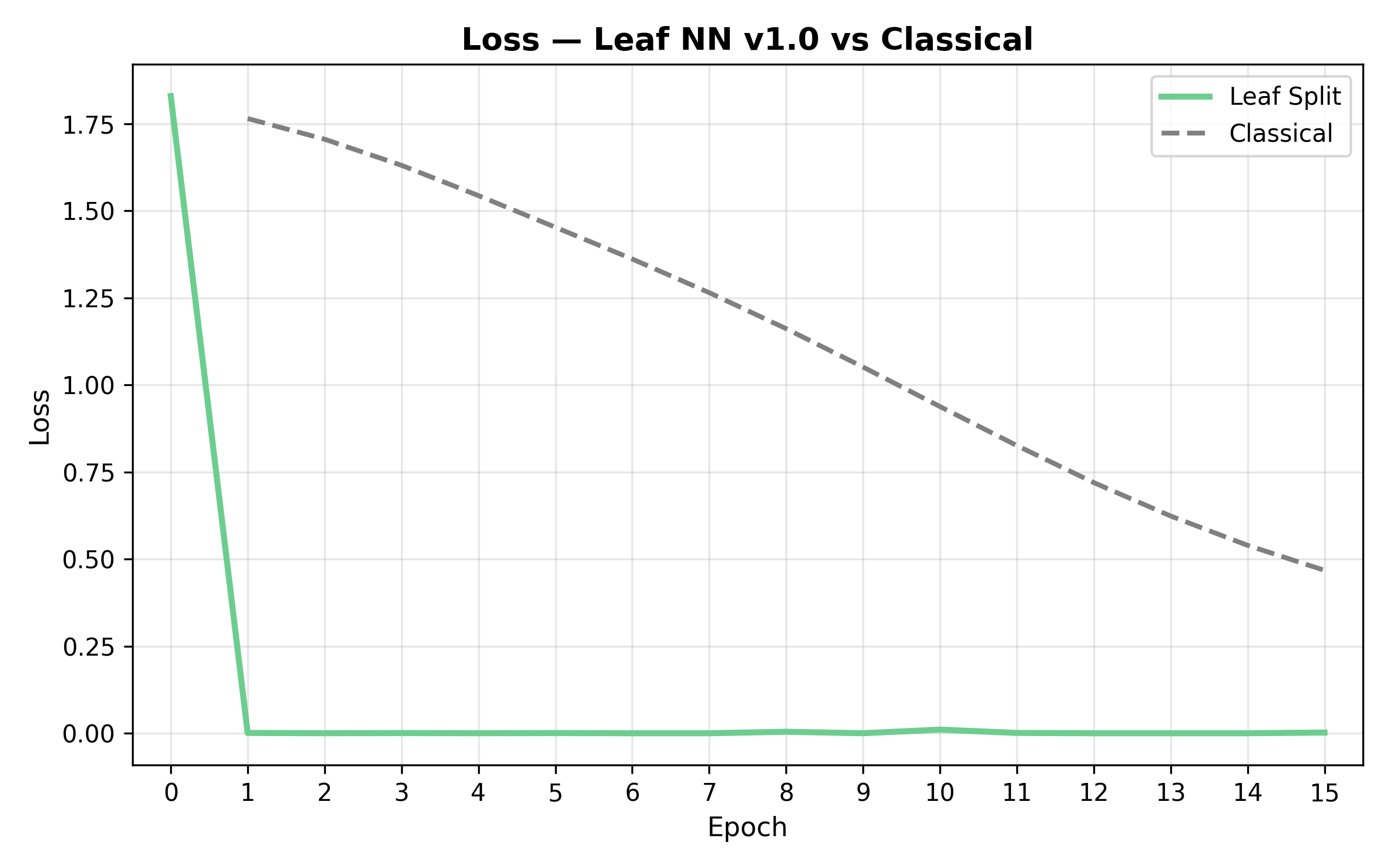

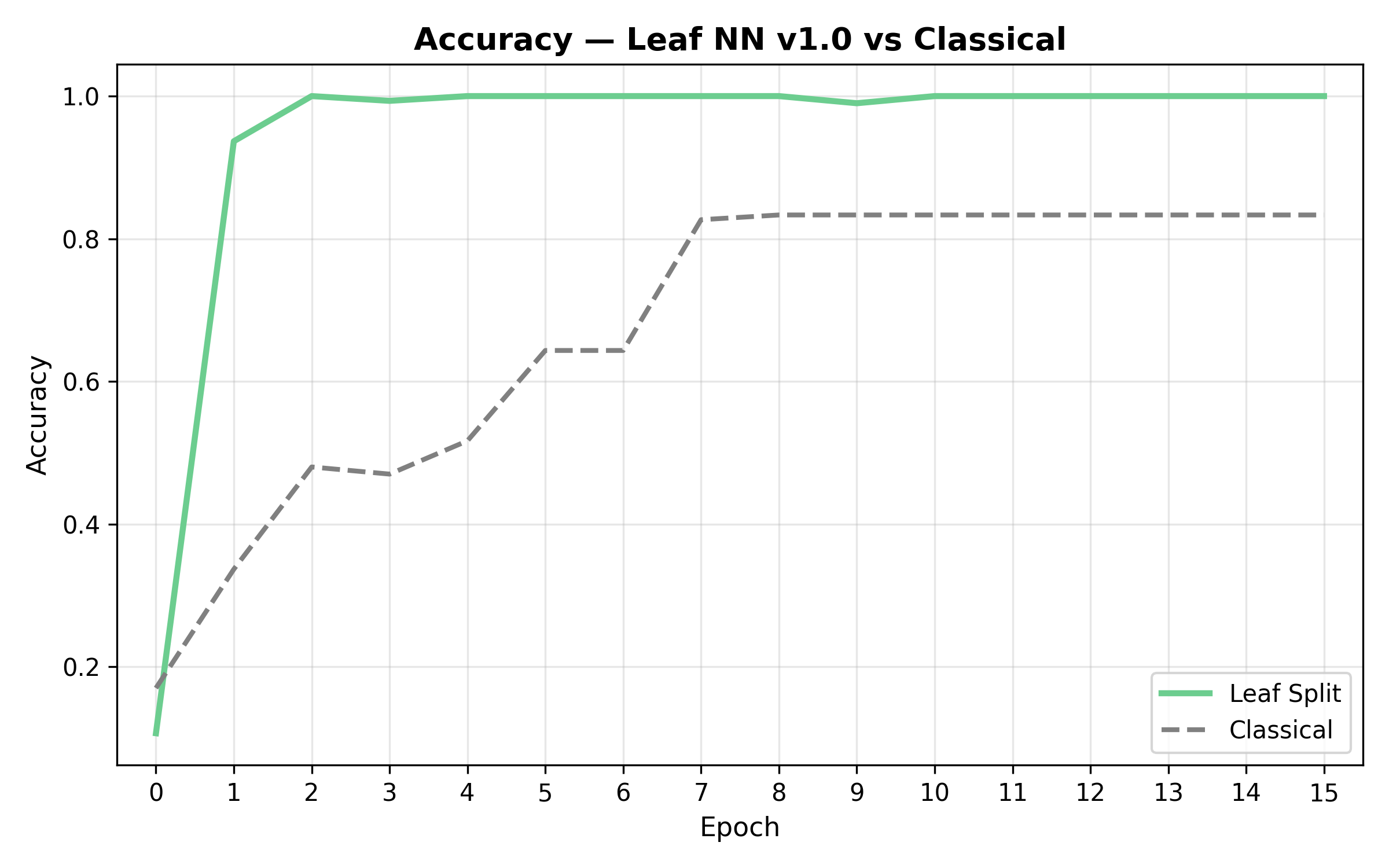

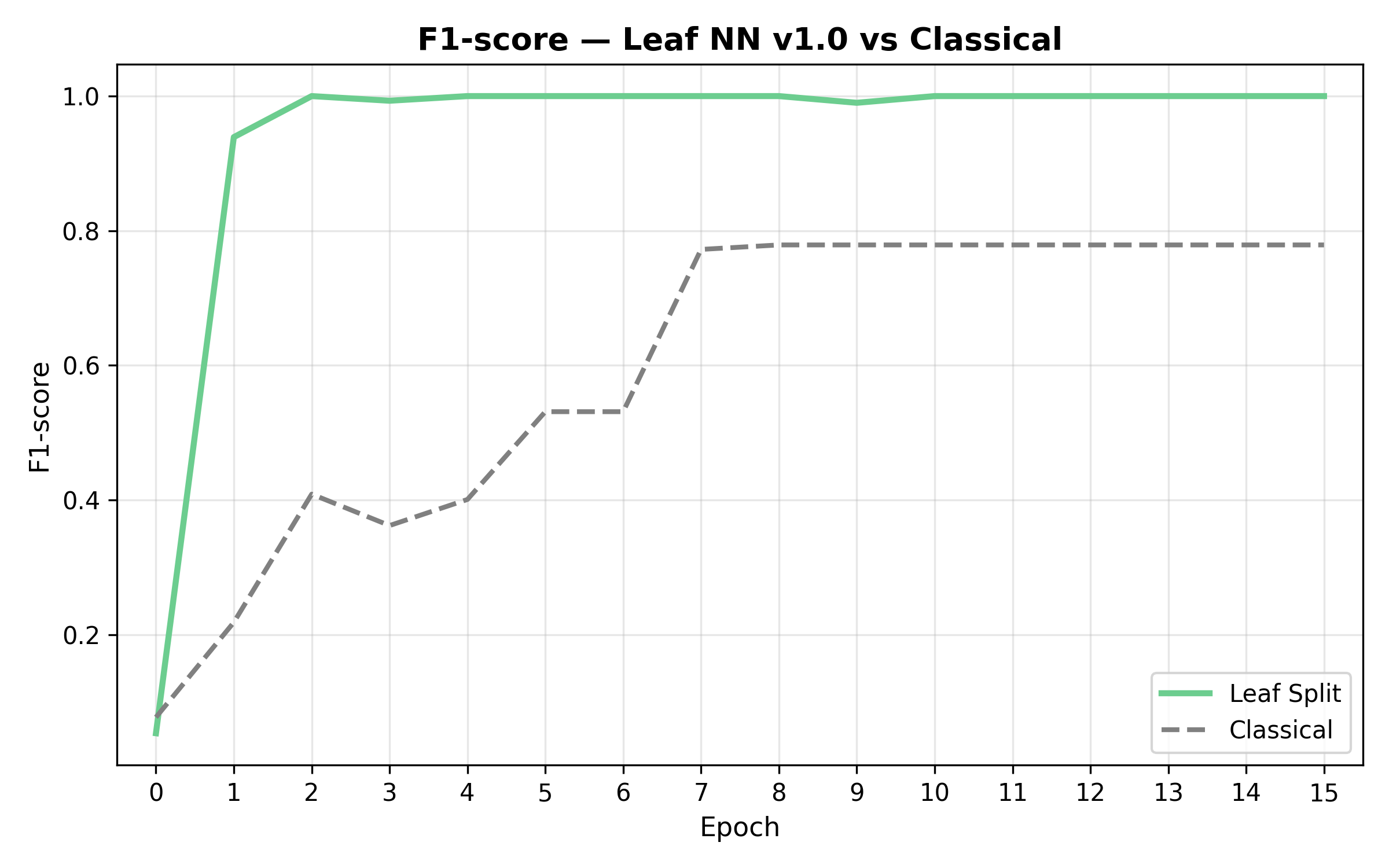

Accuracy

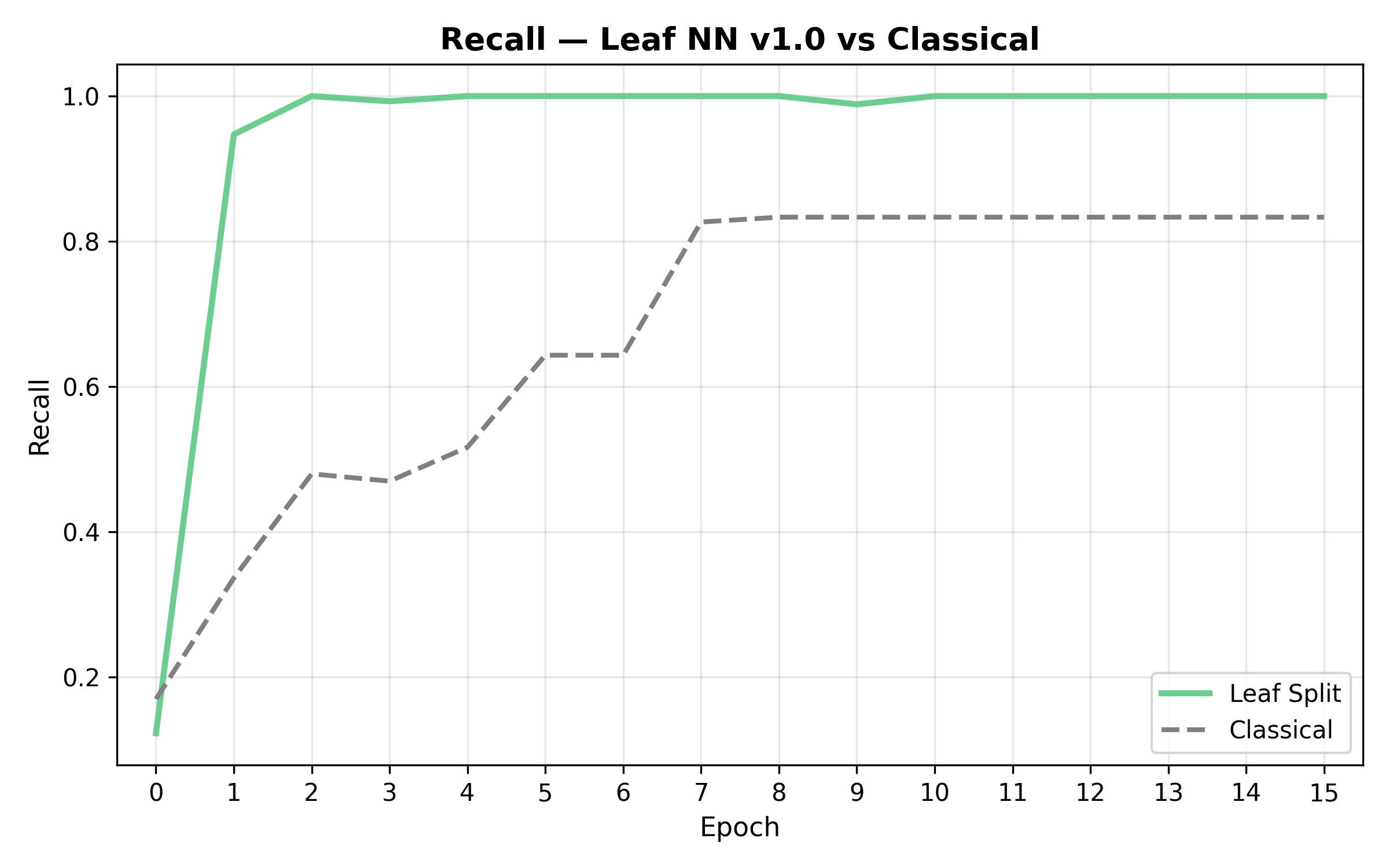

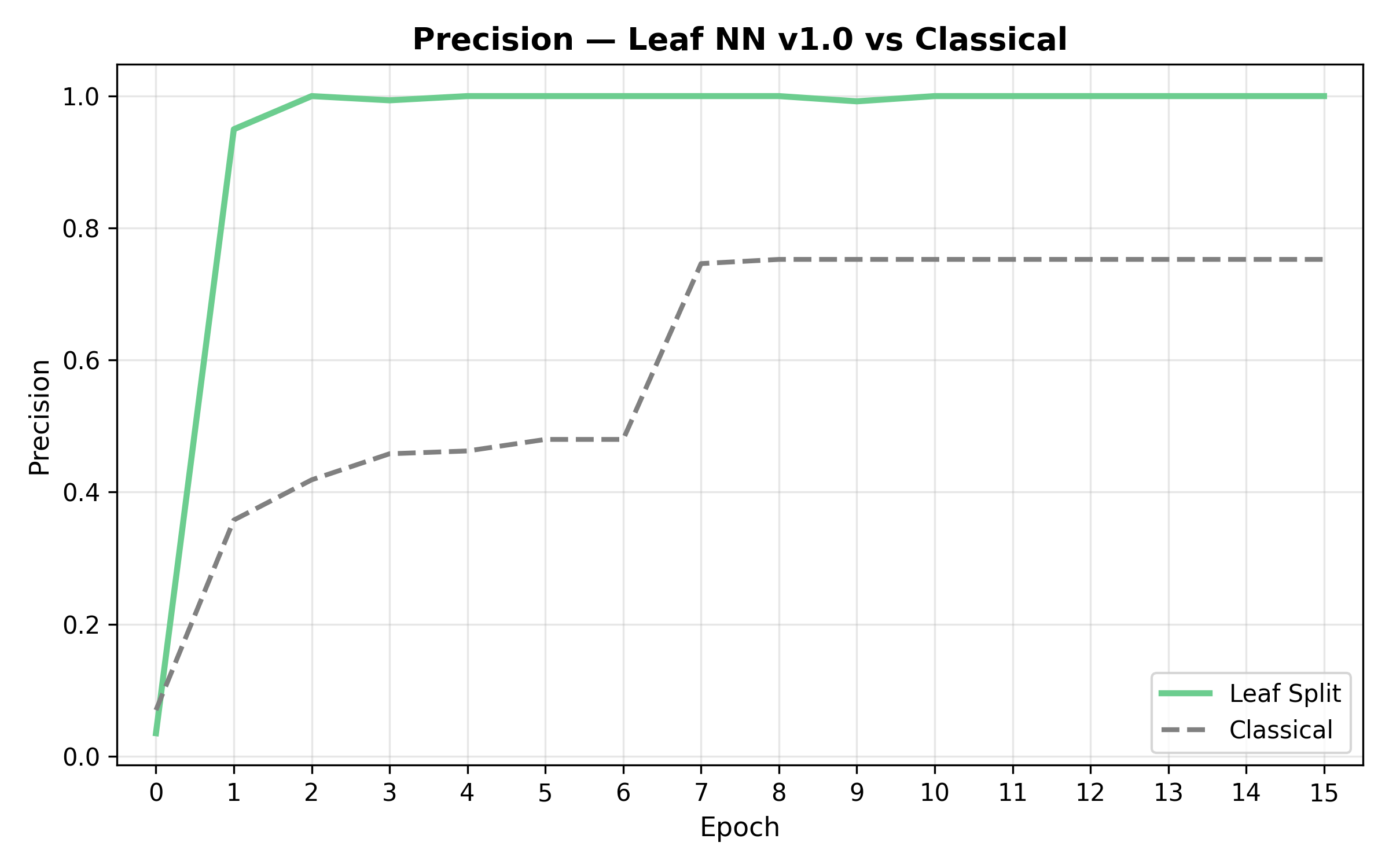

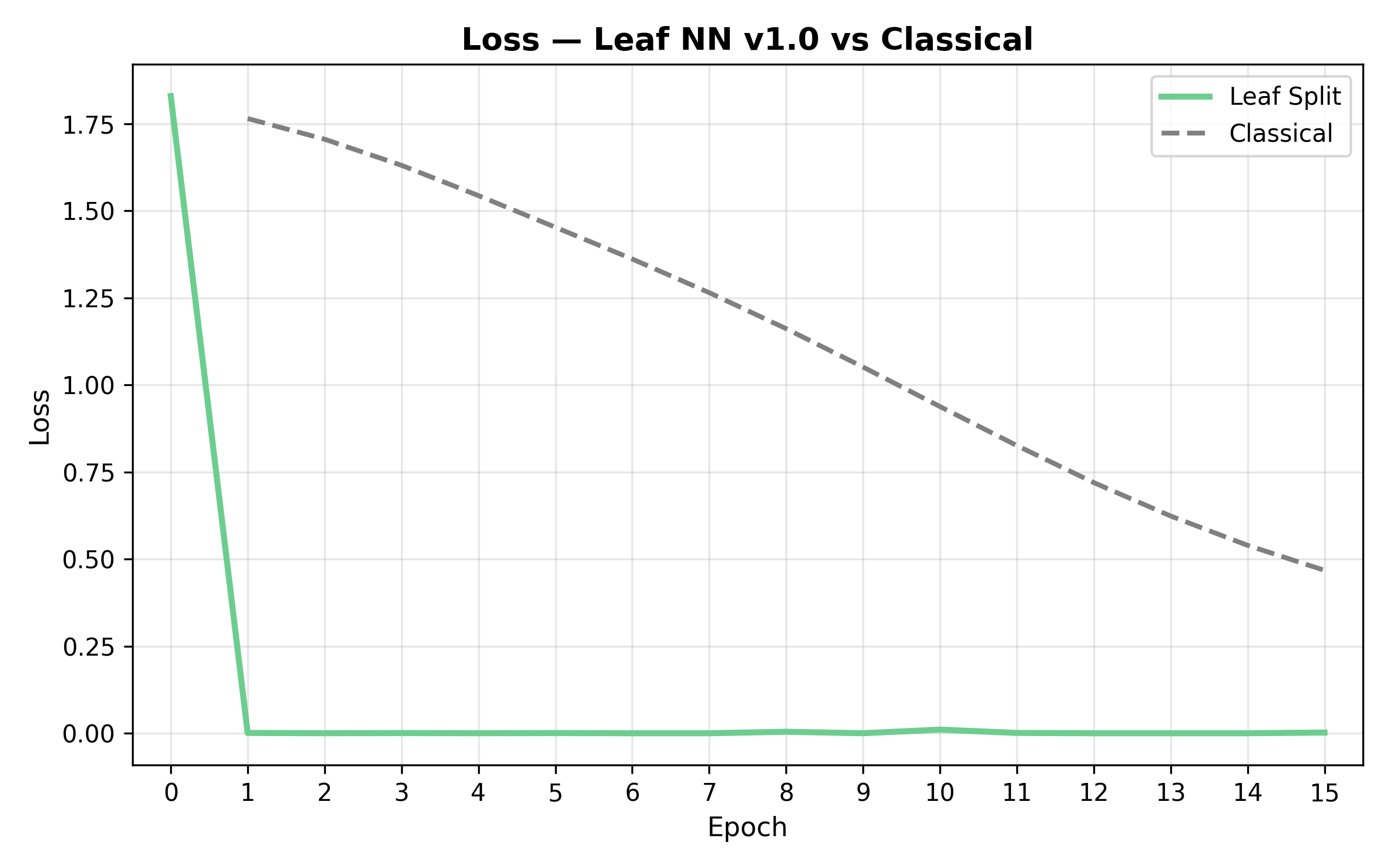

Classical Model: 83% (after the 7th epoch)

LeafNet Model: 100% (after the 2nd epoch)

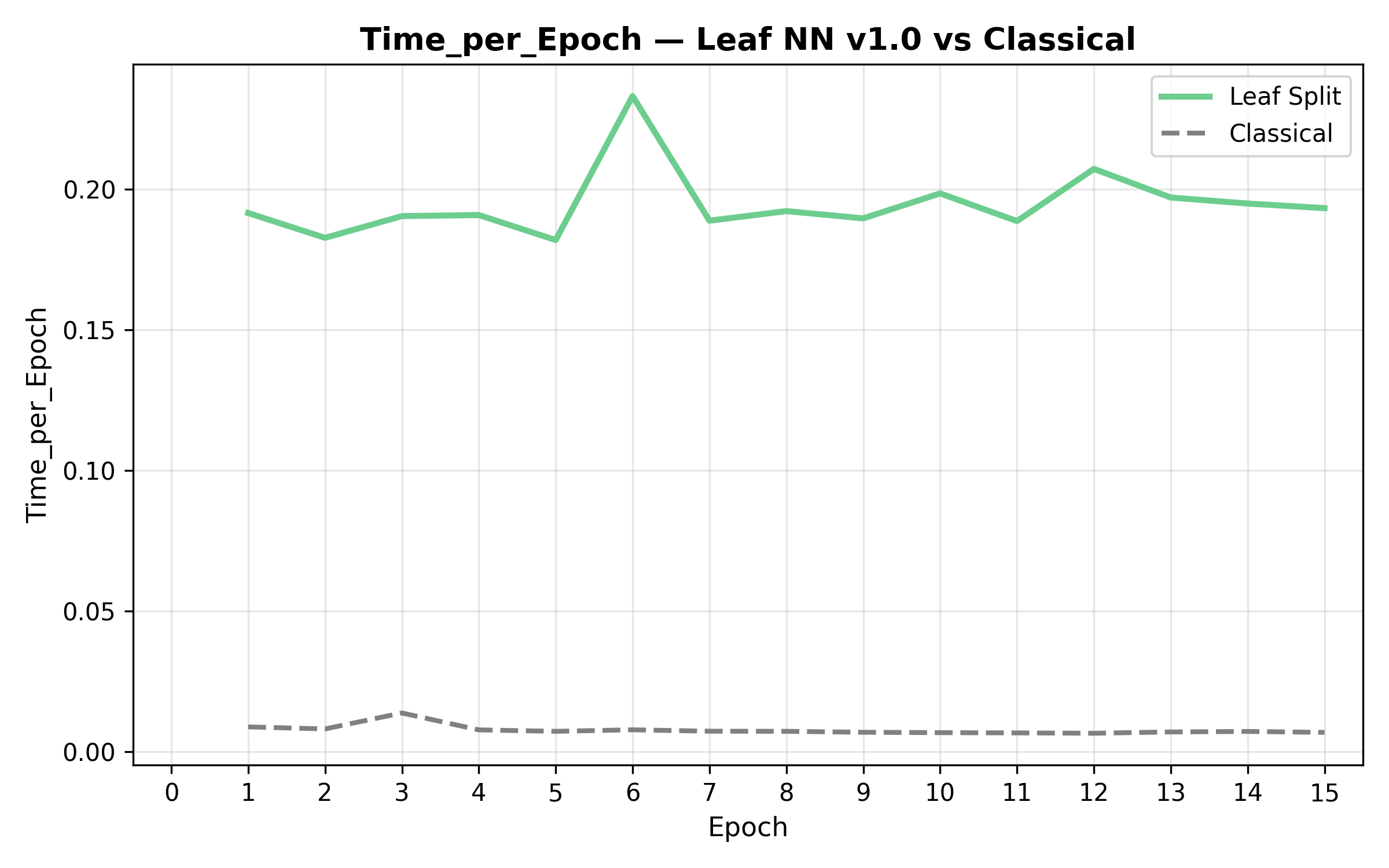

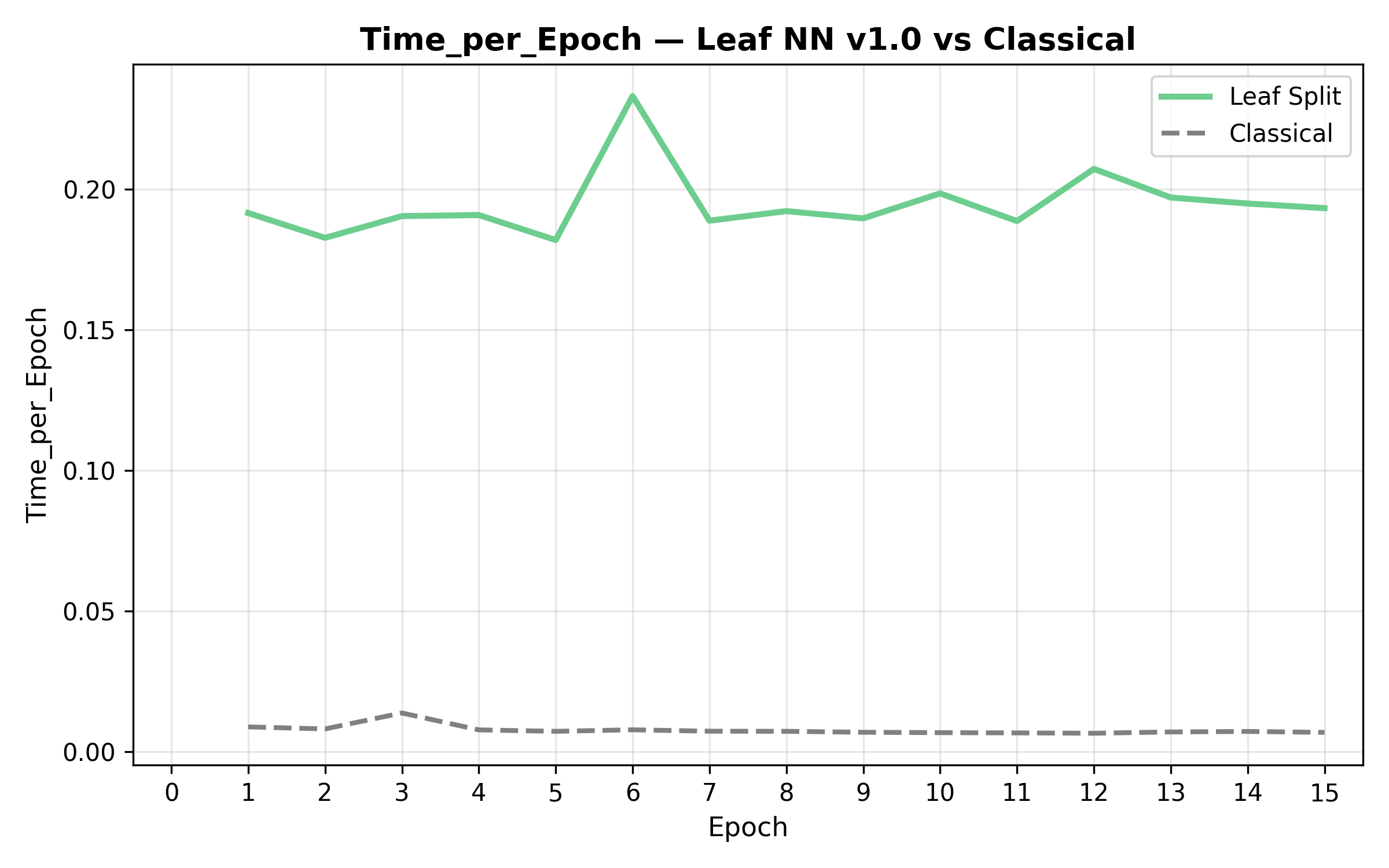

Training Time

Classical Model: ~0.2 s per training run

LeafNet Model: ~4 s per training run
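Putting the reported approximate figures side by side shows the current trade-off: LeafNet v1.0 is slower to train but much smaller. The symbols t (reported training time) and P (parameter count) are introduced here only for this comparison:

```latex
\frac{t_{\text{LeafNet}}}{t_{\text{Classical}}} \approx \frac{4\ \text{s}}{0.2\ \text{s}} = 20,
\qquad
\frac{P_{\text{Classical}}}{P_{\text{LeafNet}}} \approx \frac{9.4\text{k}}{1.4\text{k}} \approx 6.7
```

In other words, with the figures above LeafNet takes roughly 20 times longer per training run while using roughly 6.7 times fewer parameters.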

1. Training time for LeafNet v1.0 is not yet optimized and is expected to improve in future versions. The next step is to move the time-consuming computations from Python into a lower-level programming language.

2. LeafNet's parameter count grows logarithmically, which is why it will always have fewer parameters.
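The effect of logarithmic parameter growth can be illustrated with a toy comparison. This is a minimal sketch under stated assumptions: the report does not say what quantity LeafNet's parameter count scales with, so n below is a generic, hypothetical problem size, and both functions and their constants are made up for illustration rather than taken from either model.

```python
import math


def linear_growth(n, per_unit):
    """Hypothetical classical-style model: parameters proportional to n."""
    return per_unit * n


def log_growth(n, per_level):
    """Hypothetical LeafNet-style model: parameters proportional to log2(n)."""
    return per_level * math.log2(n)


# Illustrative constants only; neither is taken from the measured models.
for n in (16, 256, 4096):
    lin = linear_growth(n, per_unit=10)
    log = log_growth(n, per_level=10)
    print(f"n={n:5d}  linear={lin:8.0f}  log={log:6.0f}  ratio={lin / log:7.1f}")
```

With these made-up constants the ratio between the two counts rises from 4.0 at n = 16 to 32.0 at n = 256 and about 341 at n = 4096: the larger the problem, the wider the gap in parameter count.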